Synchronization of Concurrent Execution

The less that concurrently running processes need to be synchronized, the better. Every synchronization adds complexity and therefore significantly increases the probability of writing incorrect code. Every synchronization also requires additional code to be executed, which often noticeably reduces the processing speed of an application. Conversely, distributing specific functions among different tasks can significantly increase the responsiveness of an application, because choosing suitable priorities and carefully selecting which data is shared can significantly reduce the cycle time of certain tasks. However, the effort required to synchronize the various tasks must never be overlooked.

Note

Data can be exchanged between different tasks by message passing via queues, using the functions of the CAA_MemBlockManager (MBM.XChgCreateH, MBM.XChgCreateP, MBM.MsgSend, MBM.MsgReceive, ...). Alternatively, the work of different tasks on shared memory can be coordinated with the following functions and function blocks. These function blocks are also intended for end users and perform task synchronization in a non-blocking way.
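Message passing avoids shared state: each task owns its data and hands messages over through a queue. The following sketch only illustrates that idea with Python's standard `queue` module; it does not use or imitate the CAA_MemBlockManager API, and `producer_cycle` and `consumer_cycle` are hypothetical names. As with the function blocks described in this section, both sides use non-blocking calls, so a cycle never waits.

```python
import queue
from typing import Optional

# A bounded queue stands in for the message exchange between two tasks.
mailbox: queue.Queue = queue.Queue(maxsize=4)


def producer_cycle(message: bytes) -> bool:
    """One cycle of a hypothetical sending task: try to send, never wait."""
    try:
        mailbox.put_nowait(message)
        return True
    except queue.Full:
        return False          # queue full: try again in a later cycle


def consumer_cycle() -> Optional[bytes]:
    """One cycle of a hypothetical receiving task: try to receive, never wait."""
    try:
        return mailbox.get_nowait()
    except queue.Empty:
        return None           # no message in this cycle
```

A FALSE-like result (False or None) simply means "nothing happened in this cycle"; the task tries again the next time it runs.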

Tasks are always processed logically independently of each other. However, several tasks may have to process sub-tasks of a more complex overall problem and share certain resources, in particular data.

The SEMA and BOLT function blocks are available to control such coordination. If access to shared resources (e.g. data, procedures, devices) is to be coordinated, an instance of one of these synchronization blocks is assigned to each resource; before using a resource, the user tasks execute a "request" statement on the assigned synchronization instance and, after use, a "release" statement.

Note

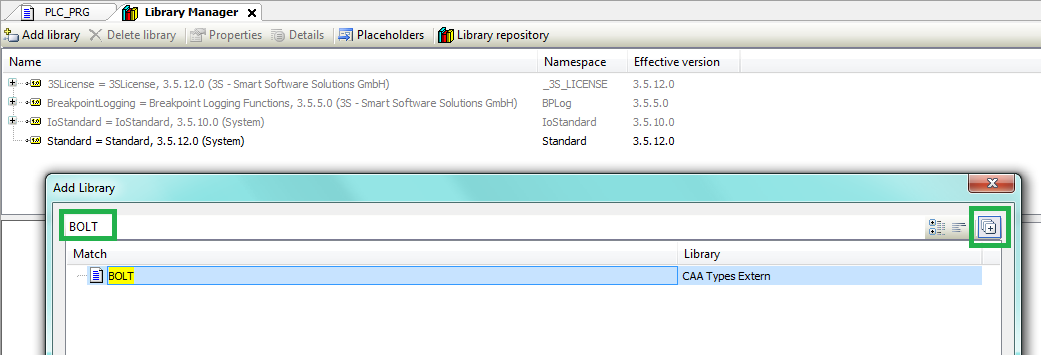

The function block types SEMA and BOLT are part of the library CAA Types Extern. To use them, execute "Add Library" and search, for example, for the string BOLT.

A binary semaphore is commonly used to implement mutual exclusion for a critical section. By contrast, a variable of the data type "BOLT" (see DIN 66253, Part 1) can be used to control either exclusive or simultaneous access to a critical section.

FUNCTION_BLOCK SEMA (* Prefix: sem *)

METHOD Request : BOOL

METHOD Release : BOOL

This function block implements a counting semaphore. It is based on the runtime system function SysCpuTestAndSet. A function block of type SEMA is used to manage limited resources in a multitasking environment. The counter of the semaphore represents the number of available resources; without special parameterization, it starts at one. The resources assigned to the semaphore are accessed with the Request method. This succeeds as long as the counter is not zero at the time of the Request call; the counter is then decreased by one. The Release method releases a previously accessed resource and increases the counter by one. Calling the method Preset sets the initial counter of the semaphore as required. Each method returns TRUE if it was executed successfully and FALSE otherwise. A resource may therefore only be used if the call to Request returned TRUE.
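To make the counter rules concrete, here is a Python model of a non-blocking counting semaphore. It is a sketch under assumptions, not the CAA implementation: the atomic test-and-set primitive (SysCpuTestAndSet in the runtime) is approximated with a non-blocking `threading.Lock.acquire`, and the class names `AtomicFlag` and `SemaModel` are illustrative. The model also shows one plausible reason, assumed here and not stated by the library, why Release itself can return FALSE: if the internal guard is momentarily held by another task, the call gives up instead of waiting.

```python
import threading


class AtomicFlag:
    """Stand-in for an atomic test-and-set flag (assumption, not SysCpuTestAndSet)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()

    def test_and_set(self) -> bool:
        # True if the flag was clear and the caller now owns it; never blocks.
        return self._lock.acquire(blocking=False)

    def clear(self) -> None:
        self._lock.release()


class SemaModel:
    """Non-blocking counting semaphore following the SEMA rules described above."""

    def __init__(self, preset: int = 1) -> None:
        self.count = preset            # number of resources currently available
        self._guard = AtomicFlag()

    def request(self) -> bool:
        if not self._guard.test_and_set():
            return False               # guard busy (assumption): retry in a later cycle
        try:
            if self.count > 0:
                self.count -= 1        # one resource handed out
                return True
            return False               # no resource available
        finally:
            self._guard.clear()

    def release(self) -> bool:
        if not self._guard.test_and_set():
            return False               # guard busy: the release did NOT happen
        try:
            self.count += 1            # resource returned (allowed before any request)
            return True
        finally:
            self._guard.clear()


sem = SemaModel()                      # default counter of one
assert sem.request()                   # 1 -> 0
assert not sem.request()               # counter is zero
assert sem.release()                   # 0 -> 1
```

Because neither method ever waits, a caller that gets False simply repeats the call in its next cycle.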

Attention

A resource has not been released successfully until the call to Release returns TRUE.

Note

As shown in the example below, it is also permitted to call Release on a resource (increasing its counter by one) before Request has ever been called on it.

Example

Single Writer (Producer) / Single Reader (Consumer)

"Consumer" and "Producer" are the names of two different tasks. They share a global resource called "g_abyBuffer".

The "Consumer" task processes the data from the buffer.

The "Producer" task fills the buffer with data.

The "Producer" cannot overwrite data that has not been read yet.

The "Consumer" can only read data that has already been produced.

VAR_GLOBAL

// Synchronize the Producer

g_semWriteLock : CAA.SEMA;

g_xWriterActive : BOOL;

// Synchronize the Consumer

g_semReadLock : CAA.SEMA := (ctPreset:=0); (* !! initial counter 0: nothing to read yet *)

g_xReaderActive : BOOL;

// The buffer, a memory area as a global resource

g_abyBuffer : ARRAY[0..4095] OF BYTE;

END_VAR

METHOD Consume: BOOL

VAR

byData : BYTE;

END_VAR

IF g_xReaderActive THEN

Consume := g_semWriteLock.Release();

g_xReaderActive := NOT Consume;

RETURN;

END_IF

IF NOT g_xReaderActive AND_THEN g_semReadLock.Request() THEN

(* do some stuff with the data *)

byData := g_abyBuffer[0];

Consume := g_semWriteLock.Release();

g_xReaderActive := NOT Consume;

ELSE

Consume := FALSE;

END_IF

METHOD Produce : BOOL

VAR_IN_OUT

abyData : ARRAY[*] OF BYTE;

END_VAR

IF g_xWriterActive THEN

Produce := g_semReadLock.Release();

g_xWriterActive := NOT Produce;

RETURN;

END_IF

IF NOT g_xWriterActive AND_THEN g_semWriteLock.Request() THEN

(* take the data from abyData and fill the buffer g_abyBuffer *)

Produce := g_semReadLock.Release();

g_xWriterActive := NOT Produce;

ELSE

Produce := FALSE;

END_IF

The "Consumer" waits for g_semReadLock to be released, i.e. for a message to process, because g_semReadLock can only be released by the "Producer". After processing is complete, the "Consumer" releases g_semWriteLock, which allows further production, and then waits for the next message. The "Producer" can only buffer a message if g_semWriteLock is free, i.e. if its Request call succeeds; after buffering a message, it releases g_semReadLock and thereby triggers the "Consumer".
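The handshake can be checked with a small cycle-by-cycle simulation. The Python sketch below translates the Produce and Consume methods almost line by line, including the flags that make a failed Release be retried first on the next call. The `Sema` class is a simplified single-threaded stand-in for CAA.SEMA (an assumption; it has no guard contention), `fail_next_release` is a hypothetical test hook, and a one-element list replaces g_abyBuffer.

```python
class Sema:
    """Single-threaded stand-in for CAA.SEMA (assumption: no guard contention)."""

    def __init__(self, preset: int = 1) -> None:
        self.count = preset
        self.fail_next_release = False   # hypothetical hook: simulate a failed Release

    def request(self) -> bool:
        if self.count > 0:
            self.count -= 1
            return True
        return False

    def release(self) -> bool:
        if self.fail_next_release:
            self.fail_next_release = False
            return False
        self.count += 1
        return True


sem_write_lock = Sema()            # the producer may write once at start
sem_read_lock = Sema(preset=0)     # nothing to read yet
buffer = [0]                       # stands in for g_abyBuffer
writer_active = False              # a Release of sem_read_lock is still pending
reader_active = False              # a Release of sem_write_lock is still pending
consumed = []


def produce(value: int) -> bool:
    global writer_active
    if writer_active:                          # retry the pending release first
        ok = sem_read_lock.release()
        writer_active = not ok
        return ok
    if sem_write_lock.request():
        buffer[0] = value                      # fill the shared buffer
        ok = sem_read_lock.release()           # hand the data to the consumer
        writer_active = not ok
        return ok
    return False


def consume() -> bool:
    global reader_active
    if reader_active:                          # retry the pending release first
        ok = sem_write_lock.release()
        reader_active = not ok
        return ok
    if sem_read_lock.request():
        consumed.append(buffer[0])             # use the data
        ok = sem_write_lock.release()          # allow the next write
        reader_active = not ok
        return ok
    return False
```

A call returning False never blocks; the task just calls the method again in its next cycle, and a pending release is completed before any new data is touched.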

Note

The SEMA function block and its methods are implemented in a non-blocking way, so the task cycle is not blocked and the PLC can do other work while "waiting" for a semaphore.

Assume that several tasks use the same data for calculations without modifying it (for example, border values for monitoring processes), and that another task recalculates this data (for example, to specify new monitoring data). It must be ensured that modifying and using the data are mutually exclusive; simultaneous use of the data by several tasks, however, is desired.

In principle, this problem can be solved with the "request" and "release" statements described above; however, the resulting code is relatively complicated and takes considerable time to execute. For this reason, four additional methods are offered that work with instances of the function block "BOLT".

A bolt variable can assume the states "locked", "lock possible" or "lock not possible", depending on whether the assigned resource is exclusively used, free or simultaneously used.
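The three states follow from two pieces of information: whether exclusive access has been granted and how many tasks are using the resource simultaneously. A minimal Python sketch of that mapping; the function and parameter names are hypothetical:

```python
def bolt_state(exclusive: bool, simultaneous_users: int) -> str:
    """Map the usage of a resource to the BOLT state names (hypothetical helper)."""
    if exclusive:
        return "locked"              # resource is used exclusively
    if simultaneous_users > 0:
        return "lock not possible"   # resource is used simultaneously
    return "lock possible"           # resource is free
```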

An instance of the BOLT function block is assigned to a resource. On entering a critical section for exclusive use of this resource, a task prevents other tasks from accessing that critical section by calling the Reserve method. On leaving the critical section, the task releases it by calling the Free method. Critical sections for simultaneous use of a resource are entered and left by calling the methods Enter and Leave, respectively.

FUNCTION_BLOCK BOLT (* Prefix: blt *)

Methods of function block BOLT for handling simultaneous access:

METHOD Enter : BOOL

METHOD Leave : BOOL

Methods of function block BOLT for handling exclusive access:

METHOD Reserve : BOOL

VAR_IN_OUT

ctValue : COUNT;

END_VAR

METHOD Free : BOOL

This function block provides exclusive or simultaneous access to a critical section. It is based on the runtime system function SysCpuTestAndSet. Exclusive access is only possible after all simultaneous accesses have ended, and as long as exclusive access is held, calls for simultaneous access are rejected.

Simultaneous access is initiated with the method Enter, which increases the number of accesses in the critical section by one. The method Leave is used to leave the critical section; it decreases the number of accesses by one. Exclusive access is requested with the method Reserve. This call can only succeed if the number of accesses in the critical section is zero. If it is not, all new calls of Enter are denied so that the number of accesses cannot increase further. Once all tasks that previously entered the critical section have left it with Leave, the next call of Reserve succeeds and the critical section can be accessed exclusively. When all work is done, the section must be freed with the method Free. While exclusive access is granted, calls for simultaneous access are denied; further calls for exclusive access are also denied, but they are counted. Calls for simultaneous access succeed again as soon as all exclusive accesses have ended.

Each method returns TRUE if it was executed successfully and FALSE otherwise. A critical section may only be accessed if Enter or Reserve has returned TRUE.

Note

The Reserve method has an additional state variable (ctValue) to distinguish a "new" reservation (ctValue = 0) from a previous one (ctValue <> 0) that is still waiting for access. In this respect, ctValue can be considered a reservation ticket number: a value of zero means that no reservation has been made so far, and a nonzero value means that a reservation has been made. The variable ctValue should be located in a memory area that is specific to the context (e.g. task) in which the Reserve method is called.

If a call of "Reserve" returns FALSE, it is absolutely necessary to keep retrying the reservation until it returns TRUE. Otherwise, every call of "Enter" will return FALSE, and simultaneous access to the critical section remains locked forever.
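The rules for Enter, Leave, Reserve, and Free, including the reservation ticket, can be summarized in a single-threaded Python model. This is a sketch under stated assumptions, not the CAA implementation: pending reservations are assumed to be served in FIFO order, the ticket is assumed to be reset to zero once the reservation is granted, and the atomic protection by SysCpuTestAndSet is left out. The `Ticket` class stands in for the task-specific ctValue variable passed as VAR_IN_OUT. The final lines reproduce the situation described above: a reservation that is never retried keeps every Enter call failing.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Ticket:
    """Task-specific reservation ticket, playing the role of ctValue (0 = none)."""
    value: int = 0


class BoltModel:
    """Single-threaded model of the BOLT rules (FIFO order is an assumption)."""

    def __init__(self) -> None:
        self.simultaneous = 0        # number of tasks inside via Enter
        self.exclusive = False       # True while a Reserve has been granted
        self.pending = deque()       # ticket numbers waiting for exclusive access
        self._next_ticket = 1

    def enter(self) -> bool:
        # Refused while exclusive access is held or merely requested.
        if self.exclusive or self.pending:
            return False
        self.simultaneous += 1
        return True

    def leave(self) -> bool:
        if self.simultaneous == 0:
            return False
        self.simultaneous -= 1
        return True

    def reserve(self, ticket: Ticket) -> bool:
        if ticket.value == 0:                    # new reservation: draw a ticket
            ticket.value = self._next_ticket
            self._next_ticket += 1
            self.pending.append(ticket.value)    # counted even though it may be denied
        if (self.exclusive or self.simultaneous > 0
                or not self.pending or self.pending[0] != ticket.value):
            return False                         # caller must retry later
        self.pending.popleft()
        self.exclusive = True
        ticket.value = 0                         # assumption: ticket consumed on success
        return True

    def free(self) -> bool:
        if not self.exclusive:
            return False
        self.exclusive = False
        return True


bolt = BoltModel()
measurement_ticket = Ticket()
assert bolt.enter()                          # "Control" starts reading
assert not bolt.reserve(measurement_ticket)  # "Measurement" must wait ...
assert not bolt.enter()                      # ... and "Scheduling" is now refused
assert bolt.leave()                          # "Control" leaves
assert bolt.reserve(measurement_ticket)      # retry with the same ticket succeeds
assert bolt.free()
assert bolt.enter()                          # simultaneous access possible again
```

Passing the same Ticket object on every retry is what lets the model recognize a waiting reservation instead of queuing a new one each cycle.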

Attention

A resource has not been released successfully until the call to Free or Leave returns TRUE.

Example

A task "Measurement" continuously determines the border values of variables of a process to be monitored; these values are required by the tasks "Control" and "Scheduling" for calculations. It must be ensured that "Measurement" changes the border values only while they are not in use; on the other hand, the tasks "Control" and "Scheduling" should be able to use the border values simultaneously.

For this purpose, an instance variable of type BOLT called "g_bltBorders" is declared; in the bodies of the three tasks, the critical sections for modifying or using the border values (g_BorderValues) are entered and left as follows:

VAR_GLOBAL

g_BorderValues : BORDERS;

g_bltBorders : CAA.BOLT;

g_xMeasurementActive : BOOL;

END_VAR

// Called by task "Measurement"

METHOD Measure : BOOL

VAR_IN_OUT

udiTicket : UDINT; // Reservation ticket: a task-specific variable of the caller, initialized to 0

END_VAR

IF g_xMeasurementActive THEN

Measure := g_bltBorders.Free();

g_xMeasurementActive := NOT Measure;

RETURN;

END_IF

IF NOT g_xMeasurementActive AND_THEN g_bltBorders.Reserve(udiTicket) THEN

(* determine new border values *)

Measure := g_bltBorders.Free();

g_xMeasurementActive := NOT Measure;

ELSE

Measure := FALSE;

END_IF

// Called by tasks "Control" and "Scheduling"

METHOD Calculate : BOOL

VAR

pxCalculatorActive : POINTER TO BOOL;

END_VAR

pxCalculatorActive := GetTaskLocalStorage();

IF pxCalculatorActive^ THEN

Calculate := g_bltBorders.Leave();

pxCalculatorActive^ := NOT Calculate;

RETURN;

END_IF

IF NOT pxCalculatorActive^ AND_THEN g_bltBorders.Enter() THEN

(* read and utilize the border values *)

Calculate := g_bltBorders.Leave();

pxCalculatorActive^ := NOT Calculate;

ELSE

Calculate := FALSE;

END_IF

Note

The BOLT function block and its methods are implemented in a non-blocking way, so the task cycle is not blocked and the PLC can do other work while "waiting" for a bolt.

If more than one task shares the Calculate method, a task-specific location for the flag that pxCalculatorActive points to is required. In the example above, this location is provided by the hypothetical function GetTaskLocalStorage, which determines the current task context and returns the address of the task-local variables.
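Python's standard `threading.local` offers the kind of per-task storage that the hypothetical GetTaskLocalStorage function is assumed to provide: every thread sees its own copy of an attribute. The sketch below uses threads in place of IEC tasks, which is only an analogy, and the helper name `task_flag` is hypothetical. The barrier makes both threads write their flag before either reads it, so a shared global flag would make one of them read the wrong value.

```python
import threading

_task_local = threading.local()


def task_flag() -> dict:
    """Return the calling thread's private flag storage (created on first use)."""
    if not hasattr(_task_local, "flags"):
        _task_local.flags = {"calculator_active": False}
    return _task_local.flags


results: dict = {}
barrier = threading.Barrier(2)


def task(name: str, value: bool) -> None:
    task_flag()["calculator_active"] = value   # set this task's own flag
    barrier.wait()                             # both tasks have written now
    results[name] = task_flag()["calculator_active"]


threads = [threading.Thread(target=task, args=("Control", True)),
           threading.Thread(target=task, args=("Scheduling", False))]
for t in threads:
    t.start()
for t in threads:
    t.join()
```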

Sometimes it is important that the current IEC task cannot be interrupted by other IEC tasks, while the rest of the task system continues to run unaffected by this requirement. In these cases, the TaskLock and TaskUnlock functions make it possible to build a safe zone in which shared IEC resources can be modified consistently over a few lines of IEC code, without interference from other IEC tasks.

FUNCTION TaskLock : ERROR

VAR_INPUT

xDummy : BOOL;

END_VAR

FUNCTION TaskUnlock : ERROR

VAR_INPUT

xDummy : BOOL;

END_VAR

These two functions use a global semaphore to synchronize IEC tasks. If one IEC task has already called TaskLock, any other task that calls TaskLock is suspended until the first task has called TaskUnlock. It is therefore very important to keep the time spent between TaskLock and TaskUnlock to an absolute minimum; otherwise, the responsiveness of the entire application is compromised.

Example

The method PopElem in the following piece of code demonstrates a possible use case of TaskLock and TaskUnlock: a doubly linked list is modified so that its first element is detached from the rest of the list and can then be returned to the caller. This IEC code must not be interrupted by another IEC task, because otherwise the list could end up in an inconsistent state.

METHOD PopElem : IElement

{IF defined (pou:CAA.TaskLock)}

CAA.TaskLock(FALSE);

{END_IF}

IF itfHead <> 0 THEN

PopElem := itfHead;

itfHead := PopElem.NextElem;

PopElem.NextElem := 0;

PopElem.PrevElem := 0;

PopElem.List := 0;

ctSize := ctSize - 1;

IF itfHead = 0 THEN

itfTail := 0;

ELSE

itfHead.PrevElem := 0;

END_IF

END_IF

{IF defined (pou:CAA.TaskUnlock)}

CAA.TaskUnlock(FALSE);

{END_IF}

Note

For compatibility with PLC targets that do not yet support the TaskLock and TaskUnlock functions, the code snippet above uses conditional compilation via pragma statements.
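For comparison, the PopElem operation can be sketched in Python, with one module-level `threading.Lock` playing the role of the global semaphore behind TaskLock and TaskUnlock. Unlike SEMA and BOLT, this lock blocks: a second thread that tries to enter waits until the first one leaves, which is why the locked region is kept to a few assignments. The names `Node`, `LinkedList`, `push_back`, and `pop_elem` are illustrative, and Python threads are only an analogy for IEC tasks.

```python
import threading
from typing import Optional

_global_task_lock = threading.Lock()   # stand-in for the semaphore behind TaskLock


class Node:
    def __init__(self, value: int) -> None:
        self.value = value
        self.next: Optional["Node"] = None
        self.prev: Optional["Node"] = None
        self.owner: Optional["LinkedList"] = None


class LinkedList:
    def __init__(self) -> None:
        self.head: Optional[Node] = None
        self.tail: Optional[Node] = None
        self.size = 0

    def push_back(self, node: Node) -> None:
        with _global_task_lock:
            node.prev, node.next, node.owner = self.tail, None, self
            if self.tail is None:
                self.head = node
            else:
                self.tail.next = node
            self.tail = node
            self.size += 1

    def pop_elem(self) -> Optional[Node]:
        with _global_task_lock:                          # like TaskLock
            node = self.head
            if node is not None:
                self.head = node.next
                node.next = node.prev = node.owner = None  # detach completely
                self.size -= 1
                if self.head is None:
                    self.tail = None
                else:
                    self.head.prev = None
            return node                                  # lock released, like TaskUnlock


lst = LinkedList()
for i in range(1000):
    lst.push_back(Node(i))

popped: list = []


def worker() -> None:
    while (node := lst.pop_elem()) is not None:
        popped.append(node.value)


workers = [threading.Thread(target=worker) for _ in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()
```

Because every pointer update happens inside the locked region, each element is handed to exactly one worker and the list never exposes a half-updated head or tail.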